The Decision Impact Spectrum: A New Framework for AI Risk

The Risk Illusion

Most enterprise AI strategies currently treat model intelligence as a proxy for safety. The underlying assumption is that a more capable model is a safer model. This creates what we call a “risk illusion.”

In reality, risk does not come from how smart the model is. It comes from what happens when the model is wrong.

A hallucinated paragraph in an internal document is a nuisance.

A hallucinated legal recommendation in a contract workflow is real damage.

The same model can be harmless in one context and catastrophic in another. Benchmarks cannot tell you which one you are building.

To build a sustainable framework, leadership must shift focus from what the AI can do to the actual impact of the decisions it is allowed to touch.

Introducing the Decision Impact Spectrum

Effectively managing this shift requires a formal method for assessing how AI influences business outcomes. The Decision Impact Spectrum serves as this foundation.

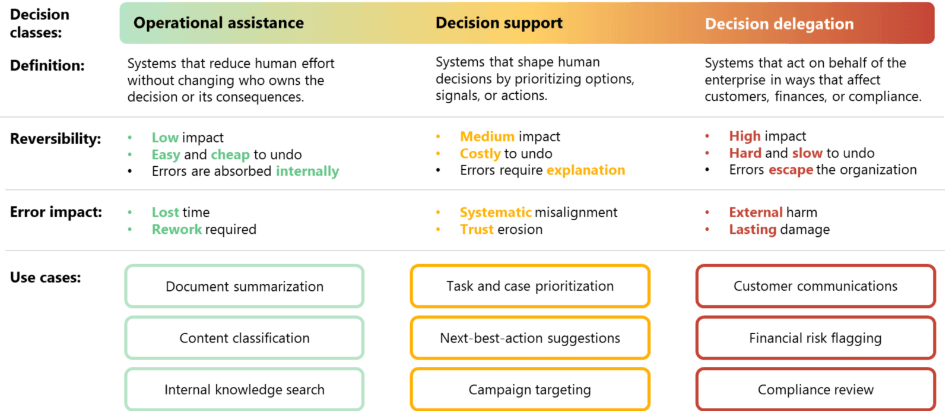

The spectrum classifies AI systems into three distinct tiers:

- Operational Assistance

- Decision Support

- Decision Delegation

With these tiers, leaders can shift their focus from vague discussions about model capability to a concrete understanding of ownership. The framework provides the vocabulary to determine how much governance, oversight, and human intervention is required for any given project.

The Efficiency Layer: Operational Assistance

Operational Assistance is the foundation of the spectrum. These systems target internal productivity and personal efficiency through tasks such as simple classification, document summarization, and internal search. Because the output stays inside the organization, the risk profile is low. The primary consequence of an error is the time an employee needs to fix it: internal rework rather than external liability.

At this stage, the individual user functions as the final guardrail, and heavy technical governance is typically not required.

The Collaboration Layer: Decision Support

The risk profile rises as AI moves from a private productivity tool to a collaborative partner. In the Decision Support tier, the system generates outputs that shape human choices by ranking options or surfacing signals. Examples include task and case prioritization, next-best-action suggestions, and campaign targeting.

At this level, the stakes change as AI begins to quietly influence expert judgment.

Failures result in systematic misalignment and the erosion of organizational trust, consequences that cannot be fixed with simple internal rework.

Managing this tier requires standardized operating procedures that keep human oversight rigorous, consistent, and fully documented.

The Autonomous Layer: Decision Delegation

The final tier applies when an AI system is authorized to act on behalf of the organization without immediate human approval. Use cases include autonomous customer service agents, compliance and legal review, and fraud prevention.

Because these actions are external and happen in real time, the consequences of a mistake shift from internal friction to external liability and lasting brand damage. Errors at this level are often immediate and difficult to reverse, creating a level of risk that manual oversight alone cannot mitigate.

Managing this tier requires a fundamental change in strategy, moving away from simple guardrails toward structural platform controls and immutable audit trails that ensure every autonomous action is traceable and defensible.
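One common way to make an audit trail tamper-evident is to chain each record to the hash of the record before it, so that altering any past entry invalidates everything after it. The sketch below is a minimal illustration under stated assumptions: the `AuditLog` class, its field names, and the sample actions are hypothetical, and the log lives in memory only. A real deployment would also need durable, access-controlled storage.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(payload: dict, prev_hash: str) -> str:
    """Hash a record together with the hash of the record before it."""
    body = json.dumps(payload, sort_keys=True) + prev_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log; each entry commits to its predecessor."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, action: str, detail: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        payload = {"actor": actor, "action": action, "detail": detail}
        self.entries.append(
            {**payload, "prev": prev_hash, "hash": _digest(payload, prev_hash)}
        )

    def verify(self) -> bool:
        """Recompute every hash; an edited or reordered entry breaks the chain."""
        prev_hash = ""
        for entry in self.entries:
            payload = {k: entry[k] for k in ("actor", "action", "detail")}
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _digest(payload, prev_hash):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("support-agent", "refund_issued", "order 1001")
log.append("support-agent", "ticket_closed", "order 1001")
print(log.verify())  # True for an untouched log

log.entries[0]["detail"] = "order 9999"  # simulate tampering with history
print(log.verify())  # False once a past entry is altered
```

Hash chaining detects changes rather than preventing them, which is why it is usually paired with storage controls at the platform level.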

Measuring the Blast Radius

To determine where a project sits on this spectrum, leadership must quantify the potential fallout of a system failure. That fallout is the AI’s Blast Radius, a measure that asks a fundamental question:

“If this AI makes a mistake, how far does the damage spread and how hard is it to contain?”

- Operational Assistance: A negligible blast radius. Errors are confined to a single user’s desk and result in minor internal rework.

- Decision Support: A moderate blast radius. Errors spread across departments, influencing professional choices and eroding organizational trust.

- Decision Delegation: A maximum blast radius. Errors hit the market in real time, carrying lasting legal, financial, or compliance consequences.

Without the right governance, an error in a high-impact tier does not just stall a workflow. It can explode into a legal or financial crisis that impacts the entire enterprise.

By assessing this radius before a tool is deployed, leadership can accurately assign the necessary level of technical and legal oversight.
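The blast radius assessment can be sketched as a small classification step. The example below is illustrative only: the `Reach` levels, the `classify` rule, and the rule that a hard-to-reverse internal error is treated as at least Decision Support are assumptions made for this sketch, not part of the framework itself. The oversight descriptions are summarized from the tier sections above.

```python
from enum import Enum


class Reach(Enum):
    """How far an error spreads (hypothetical scale for this sketch)."""
    SINGLE_USER = 1
    CROSS_DEPARTMENT = 2
    EXTERNAL = 3


class Tier(Enum):
    """The three tiers, valued by their blast radius."""
    OPERATIONAL_ASSISTANCE = "negligible"
    DECISION_SUPPORT = "moderate"
    DECISION_DELEGATION = "maximum"


# Oversight per tier, paraphrased from the tier descriptions.
OVERSIGHT = {
    Tier.OPERATIONAL_ASSISTANCE: "individual user review",
    Tier.DECISION_SUPPORT: "standardized, documented human oversight",
    Tier.DECISION_DELEGATION: "structural platform controls and audit trails",
}


def classify(reach: Reach, easy_to_reverse: bool) -> Tier:
    """Map spread and containment to a tier (assumed rule, not canonical)."""
    if reach is Reach.EXTERNAL:
        return Tier.DECISION_DELEGATION
    # Assumption: internal errors that are hard to undo are not "negligible".
    if reach is Reach.CROSS_DEPARTMENT or not easy_to_reverse:
        return Tier.DECISION_SUPPORT
    return Tier.OPERATIONAL_ASSISTANCE


tier = classify(Reach.CROSS_DEPARTMENT, easy_to_reverse=True)
print(tier.name, "|", tier.value, "|", OVERSIGHT[tier])
```

An organization adopting this approach would replace the two inputs with whatever evidence it uses to answer the blast radius question for its own workflows.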

Defining the Class Before the Model

The ultimate goal of this framework is to move AI strategy from a technical discussion to a leadership responsibility. Every project in an enterprise pipeline should be assigned a decision class before a single line of code is written or a model is selected.

Defining the impact of the decision determines the requirements for the platform, the necessity of human oversight, and the level of risk the board is willing to accept.
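The "class before model" rule can be enforced as a simple gate in a project intake process. The sketch below assumes a hypothetical `Project` record and `select_model` function; the names and the placeholder model string are invented for illustration and do not refer to any real tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class DecisionClass(Enum):
    OPERATIONAL_ASSISTANCE = "Operational Assistance"
    DECISION_SUPPORT = "Decision Support"
    DECISION_DELEGATION = "Decision Delegation"


@dataclass
class Project:
    """Hypothetical pipeline entry; both fields start unset."""
    name: str
    decision_class: Optional[DecisionClass] = None
    model: Optional[str] = None


def select_model(project: Project, model: str) -> None:
    """Refuse to record a model choice until a decision class is assigned."""
    if project.decision_class is None:
        raise ValueError(
            f"{project.name}: assign a decision class before selecting a model"
        )
    project.model = model


project = Project("contract-review-assistant")
try:
    select_model(project, "placeholder-model")
except ValueError as err:
    print(err)  # the gate blocks model selection

project.decision_class = DecisionClass.DECISION_DELEGATION
select_model(project, "placeholder-model")
print(project.model, "|", project.decision_class.value)
```

The point of the gate is ordering: the decision class is recorded first, so platform and oversight requirements are known before any technology choice is made.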

When organizations lead with the decision rather than the technology, they stop chasing model benchmarks and start building resilient, accountable systems.